UPDATE 2015-07-02: the Dutch government released the intelligence bill into public consultation. Details here.

UPDATE 2015-06-01: changed “goal-oriented” to “purpose-oriented” everywhere, including in the (translated) diagram; it’s a better, less confusing translation (credits to A).

In the Netherlands, non-specific interception (Dutch: “ongerichte interceptie”; alternative English translations include “untargeted interception”, “unselected interception” or “bulk interception”) by Dutch intelligence services is interception without a priori specifying the identity of a person or organization, or technical characteristics (IMEIs, IPs, phone numbers, etc.). The legal basis for non-specific interception currently is Articles 26 and 27 of the Wiv2002 (…) intercepting large quantities of foreign PSTN traffic. This constituted foreign intelligence collected for purposes of counter-terrorism and protecting military operations abroad.

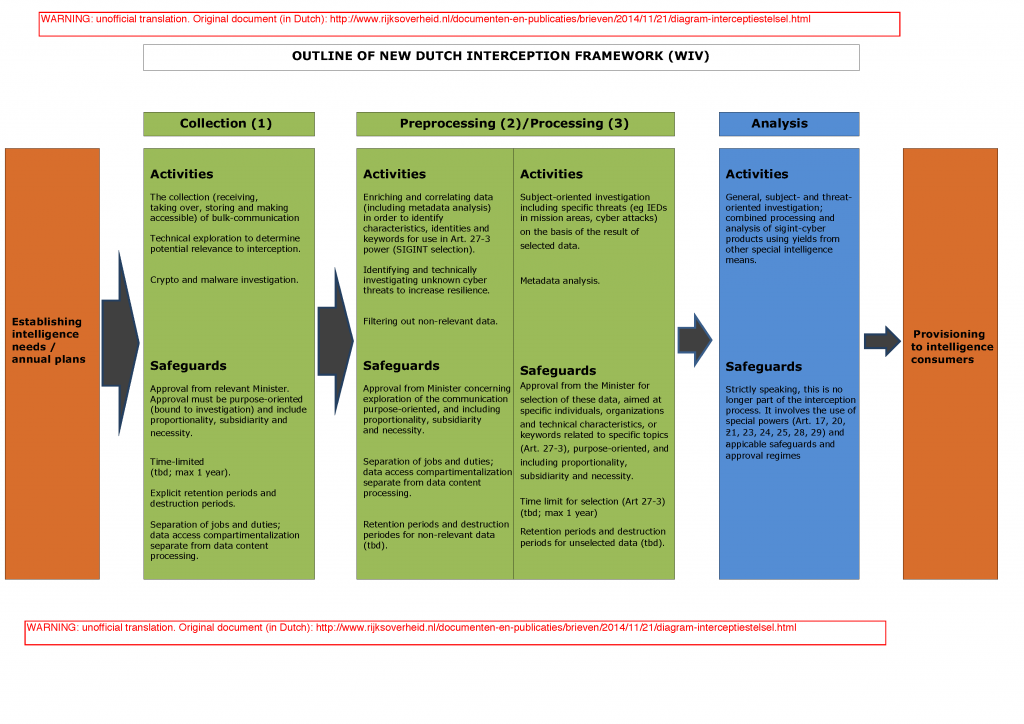

The upcoming bill to change the Wiv2002 seeks to expand the non-specific interception power to cable communications (e.g. fibers and switches of ISPs and telcos), and will include a new interception framework — explained in the post here, and depicted in the diagram below (copied from that earlier post).

The bill will eliminate the word “non-specific” (Dutch: “ongericht”) from the law, and introduce the requirement that such interception must be bound a priori to a specific investigation (which can be long-running), i.e., that it be purpose-oriented (Dutch: “doelgericht”; alternative English translations could include “objective-oriented” or “goal-oriented”, but preferably not “target-oriented” because that may be wrongly interpreted here as being aimed at a target person or organization, which is not the case). Whatever the lingo, it constitutes a power to search and select bulk communications; the latter term is also literally mentioned in the government’s diagram (.pdf, in Dutch; see ‘Collection’ phase) of the draft interception framework. As in the current situation, the intelligence services will send requests for approval to the Minister, the Minister decides, and it is then up to the Dutch Review Committee on the Intelligence & Security Services (CTIVD) to examine afterwards whether the activities were lawful.

In the proposed regime, the purpose must be defined with increasing specificity depending on the phase of the new interception framework. In the collection phase the purpose can be defined more broadly or vaguely, in the preprocessing phase it must be more specific, and in the processing and analysis phases it must be most specific. Collection and preprocessing will be authorized without having to specify persons, organizations and/or technical characteristics; processing and analysis do require such specification. Obviously, the collection and preprocessing phases are the most interesting from the perspective of protecting the legal and moral rights of non-targets, precisely because those activities are authorized without such specification. Here is an overview of the activities and safeguards per phase:

- COLLECTION PHASE:
  - Activities:
    - Receiving bulk communications
    - Storing bulk communications
    - Making intercepts ‘accessible’ for processing or preprocessing
  - Safeguards:
    - Approval from Minister, based on purpose-orientation, necessity, proportionality and subsidiarity
    - Time-limited (tbd; max 1 year)
    - Explicit retention and destruction periods
    - Separation of jobs and duties: data access compartments separate from data content processing

- PREPROCESSING PHASE:
  - Activities:
    - Enriching and correlating of data (metadata analysis) in order to identify technical characteristics, identities and keywords for use in Art.27-3 power (Sigint selection)
    - Identifying and technically investigating unknown cyber threats to increase resilience
    - Filtering out non-relevant data
  - Safeguards:
    - Approval from Minister for exploration of the communication (Sigint search), based on purpose-orientation, necessity, proportionality and subsidiarity
    - Separation of jobs and duties: data access compartments separate from data content processing
    - Explicit retention and destruction periods for non-relevant data (tbd)

- PROCESSING PHASE:
  - Activities:
    - Subject-oriented investigation (“subject” meaning specified persons, organizations and technical characteristics) including specific threats (e.g. IEDs in mission areas or cyber attacks) on the basis of the result of selected data
    - Metadata analysis
  - Safeguards:
    - Approval from Minister for selection of these data, aimed at specific individuals, organizations and technical characteristics, or keywords related to specific topics (Art.27-3), purpose-oriented, and including necessity, proportionality and subsidiarity
    - Time limit for selection (Art.27-3) (tbd; max 1 year)
    - Explicit retention and destruction periods for unselected data (tbd)

- ANALYSIS PHASE:
  - Activities:
    - General investigation, subject-oriented investigation, threat-oriented investigation; combined processing and analysis of Sigint-cyber products using yields from other special intelligence means
  - Safeguards:
    - Strictly speaking, the analysis phase is no longer part of the interception process. This phase involves the use of special powers (Art. 17, 20, 21, 23, 24, 25, 28, 29) and applicable safeguards and approval regimes.

The output of the analysis phase is then provided to intelligence consumers. Note that “metadata analysis” (mentioned in both preprocessing and processing phases) can be purely technical (preprocessing phase?), or aimed at identifying subjects and patterns, for instance by linking to other databases such as CIOT, a centralized telco/ISP subscriber database, to look up persons associated with an IP address or phone number (processing phase?).
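The phase-dependent authorization rules can be made concrete with a small sketch. The Python below is purely illustrative: the `Request` class, its field names and `missing_requirements` are hypothetical and appear nowhere in the bill. It only encodes what the overview states, namely that every phase needs a purpose plus necessity, proportionality and subsidiarity, and that only processing and analysis need specified subjects.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    COLLECTION = 1
    PREPROCESSING = 2
    PROCESSING = 3
    ANALYSIS = 4


# Phases that, per the overview, may be authorized without naming
# persons, organizations or technical characteristics.
NO_SUBJECT_REQUIRED = {Phase.COLLECTION, Phase.PREPROCESSING}


@dataclass
class Request:
    """Hypothetical authorization request sent to the Minister."""
    phase: Phase
    purpose: str            # purpose-orientation ("doelgericht"), free text
    necessity: bool
    proportionality: bool
    subsidiarity: bool
    # Persons, organizations, technical characteristics or keywords.
    subjects: list = field(default_factory=list)


def missing_requirements(req: Request) -> list:
    """Return the names of requirements the request does not state."""
    missing = []
    if not req.purpose.strip():
        missing.append("purpose")
    for name in ("necessity", "proportionality", "subsidiarity"):
        if not getattr(req, name):
            missing.append(name)
    if req.phase not in NO_SUBJECT_REQUIRED and not req.subjects:
        missing.append("subjects")
    return missing


bulk = Request(Phase.COLLECTION, "hypothetical investigation", True, True, True)
selection = Request(Phase.PROCESSING, "hypothetical investigation", True, True, True)
print(missing_requirements(bulk))       # []
print(missing_requirements(selection))  # ['subjects']
```

Under these assumptions, a collection request is complete with nothing more than a free-text purpose and the three standard tests, which is why the required specificity of that purpose matters so much.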

The requirements of necessity, proportionality and subsidiarity are mentioned repeatedly, but those requirements already apply, and various CTIVD oversight reports show that they have typically not all been met in the practice of Sigint in recent years (2009-current, with 2009 being the first year the CTIVD published an oversight report addressing the use of Sigint powers). That problem is one of the reasons for establishing a new interception framework: grouping activities into phases that each have a separate authorization requirement tailors authorizations to specific types of access to and use of (bulk) data. The obvious key question is: how will this interception framework turn out in practice? What lower bound will apply to the definition of “purpose” in each phase, including the collection phase, for the Minister to accept it? And for the CTIVD?

For instance, could the Minister, hypothetically, authorize the collection (or for that matter manipulation or disruption) of any or all Tor traffic (e.g. all Tor traffic routed in/via the Netherlands) as part of a (perhaps multi-nation (.pdf)) effort to deanonymize Tor users? The upcoming bill is expected to permit the government to require internet providers and telecom providers to provide access to the communications routed over their cables (which might include fiber taps, port mirrors, etc.). Will it be legally possible to copy all AMS-IX-routed Tor traffic to the JSCU and/or foreign partners? Legitimate purposes might include identification of sources behind Tor-anonymized cyber attacks, terrorist propaganda, or trade in precursor chemicals. In this hypothetical case, will the anonymity of the mass of non-targets among the Tor users, and public trust in the Tor network, carry weight in the decision to authorize a particular operation that affects them?

It is not hard to think of specific legitimate applications of bulk-style interception (search & select), but, taking into account the law of unintended consequences and the tendency toward weasel-like use of language, we should also explore the theoretical limits of the upcoming bill. This can be done by dreaming up hypothetical (but realistic) scenarios in which privacy and trust (in infrastructure) are infringed upon, and then figuring out under what circumstances or conditions each scenario would or would not be lawful under the proposed legislation. To cite from a new CoE report (.pdf, June 2015) on democratic oversight of security services (the Dutch services are both intelligence and security services):

Security service activities impact a variety of human rights, including the right to life, to personal liberty and security, and the prohibition of torture or inhuman, cruel and degrading treatment. They also impinge on the right to privacy and family life, as well as the rights to freedom of expression, association and assembly, and fair trial. It is therefore crucial that security services uphold the rule of law and human rights in undertaking their tasks.

First, recall that under codename “Argo II”, the Ministry of Defense acquired EUR 17M worth of equipment for processing Sigint, allegedly (primarily?) Sigint related to “the world of the internet protocol” (IP traffic). The equipment is used by both AIVD and MIVD, and replaces existing systems. That’s what’s publicly known. The precise applications of Argo II are not publicly known, but it wouldn’t be a leap of faith to conjecture that 1) the equipment performs Sigint search (preprocessing phase) and selection (processing phase) of bulk-intercepted data (e.g. text, audio, video, images, and/or telemetry), based on keywords, names of persons, names of organizations, and/or technical characteristics, and that 2) the equipment is likely fed live data streams more or less straight from bulk-interception sources in Eibergen (radio), Burum (satellite), and if the bill is adopted, taps at Dutch ISPs of the government’s choice (likely at least the largest ISPs/telcos; and perhaps small(er) ISPs/telcos that have links of strategic value to Dutch intelligence). Presumably, it will be proposed to make it legally possible to place taps between data centers; but the cabinet in November 2014 did state (.pdf, in Dutch) that the services would not get “unrestricted and independent access” (Dutch: “onbeperkte en zelfstandige toegang”) to cables: no clandestine access — only access by legal coercion (we’ll see how the law arranges that).

Next, recall what the first Dutch Defense Cyber Strategy (2012) said about expanding the MIVD’s capabilities for covert gathering of information in cyberspace:

“This includes infiltration of computers and networks to acquire data, mapping out relevant sections of cyberspace, monitoring vital networks, and gaining a profound understanding of the functioning of and technology behind offensive cyber assets. The gathered information will be used for early-warning intelligence products, the composition of a cyber threat picture, enhancing the intelligence production in general, and conducting counterintelligence activities. Cyber intelligence capabilities cannot be regarded in isolation from intelligence capabilities such as signals intelligence (SIGINT), human intelligence (HUMINT) and the [MIVD]’s existing counterintelligence capability.”

Next, inspire creativity by changing your mindset to “Collect it All,” “Process it All,” “Exploit it All,” “Partner it All,” “Sniff it All” and “Know it All”. Make sure you’ve read up on the Snowden leaks here (handy chart), here, here and here, and on the ANT Catalog here. Think of vulnerabilities and strengths in the current design, implementations and configurations of IPv4/IPv6, TLS, IPsec, HTTP(/2), SMTP, DNS, BGP, Tor/I2P, etc. Think what increasing use of cryptography means for obtaining access to data that’s encrypted in storage (e.g. FDE; non-backdoored/flawed design? non-backdoored/flawed implementation? no useful cryptanalysis possible? can’t rubber-hose the key w/o target detecting they are a target?), in transfer, and perhaps in the future, while being processed (idem for homomorphic crypto). Think of (im)possibilities concerning traffic analysis (correlation attacks), cryptanalysis and attacking keys and end-devices. Read books on intelligence. Read annual reports of the intelligence services, and read the CTIVD’s oversight reports. Read openly advertised job positions at the intelligence services. Read relevant parliamentary papers. Take note of the topics mentioned by the Minister of Defense during the debate of February 10th 2015 about the upcoming bill:

- cyber threats cannot be identified in a timely manner;

- Dutch military personnel abroad are probably less protected and supported (the Minister added that cable networks are increasingly used in mission areas and conflict zones);

- terrorist activities may not be identified in a timely manner;

- the true intentions of risk countries that may be seeking WMDs will remain hidden (the Minister added, with strong seriousness in voice and facial expression, that we lost insight into activities of countries possibly seeking WMDs, because those countries changed to cable communications);

- we are not able to quickly build an information position in upcoming crises abroad;

- theft of intellectual property, vital economic information, and state secrets goes unnoticed.

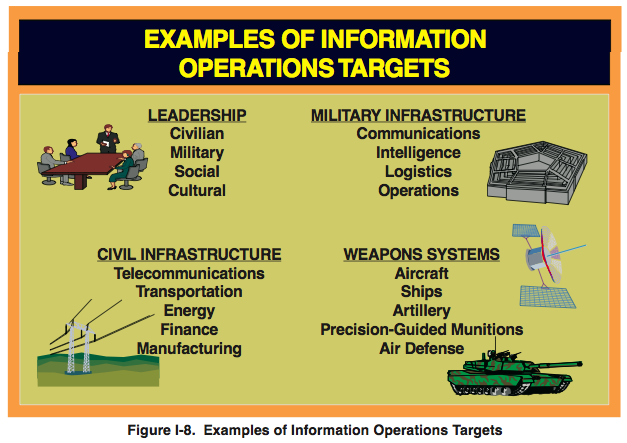

Think how strategic objectives are translated to tactical and operational objectives (strategy-to-task planning). Make a list of domestic and foreign interests (political, military, economic, etc.), and of who the intelligence consumers might be (decision-makers in the cabinet; ministries, see the UK’s relations between GCHQ and ministries (.pdf); vital sectors; military; customs; etc.). Dream up hypothetical (but plausible) domestic and foreign intelligence objectives (AIVD). Then dream up hypothetical (but plausible) military Information Operations (IO) objectives (MIVD) using the accompanying picture, taken from the initial version of Joint Publication (JP) 3-13 (.pdf, 1998). Think of both defensive and offensive objectives. Draw high-level attack trees. Visualize intelligence cycles happening at the strategic, tactical and operational level. It’s a lot of effort, but should yield some appreciation of what intelligence is about.

(Note: IO objectives can be pursued by any military means available and do not always depend on interception, certainly not interception alone; IO is an all-source paradigm. Humint, Osint etc. must be taken into account. Ask yourself: what data/information could be needed precisely? What are plausible sources and methods to acquire it? What are the advantages and disadvantages of each method? What communication links are used, which ones should you target, how do you gain access to them? Where is data stored, how do you gain access to it? Etc. Also take into account the qualities, limitations and problems of Sigint.)

To assess the quality of legislation, apply the Dutch government’s own normative framework, entitled “Integraal Afwegingskader beleid en regelgeving” (IAK; in Dutch). The IAK is commissioned and applied by the government itself to evaluate and improve legislative quality, but anyone can use it to scrutinize legislation, and it would be foolish to assume its outcome is flawless legislation, or even the best possible alternative. After all, politics remain involved. The IAK can be used as a rich source of questions to ask about the legislative quality of the upcoming bill. See the IAK leaflet (.pdf, in Dutch) for a quick overview; non-Dutch readers may get some idea by reading an early publication about this topic: Coping with Uncertainty – A Framework for Evaluation of Legislation (.doc, 2010, Veerman & Mulder). (Again, lots of reading is involved.)

To assess the ethics of intelligence collection, apply Ross Bellaby’s Just Intelligence Principles (2012). Bellaby defines six principles to assess the ethics of intelligence collection. The principles can guide the process of seeking a balance between interests of collecting intelligence and interests of protecting physical and mental integrity, autonomy, liberty, human dignity and privacy — the latter interests being vital human interests, according to Bellaby. (And if a proper balance cannot be struck, the proposed collection should not take place: necessity does not imply proportionality.) These are Bellaby’s just intelligence principles:

- Just cause: there must be a sufficient threat to justify the harm that might be caused by the intelligence collection activity.

“Thomas Aquinas argued that for a war to be just there must be some reason or injury to give cause, namely that ‘those who are attacked must be attacked because they deserve it on account of some fault’. Currently, international law frames ‘self-defence’ as the main justification for going to war.”

- Authority: there must be legitimate authority, representing the political community’s interests, sanctioning the activity.

“For a war to be considered morally permissible according to the just war tradition it must be authorized by the right authority, that is, those who have the right to command by virtue of their position. As Aquinas stated, ‘the ruler for whom the war is to be fought must have the authority to do so’ and ‘a private person does not have the right to make war’. (…) Similarly, one can argue that in order for intelligence collection to be just, there must be a legitimate authority present to sanction the harms that can be caused.”

- Intention: the means should be used for the intended purpose and not for other (political, economic, social) objectives.

“Leaders must be able to justify their decisions, noting that they had the right intentions; ‘for those that slip the dogs of war, it is not sufficient that things turn out for the best’.”

“Another implication of this principle is reflected in the current debate on personal information databases and how crossover information collection should be restricted. If information is collected – DNA, fingerprints, personal data for example – under a just cause with the appropriate degree of evidence, but was incidentally connected to another crime, then the information can be used since the original just cause and correct intention was present. This would be analogous to finding illegal goods incidentally while performing a legal search. However, what is not permissible is to use a just cause such as tax fraud to justify the collection and retention of DNA, as this type of information is unrelated and is not reflecting the original just cause, clearly outside what should be the correct intention.”

- Proportion: the harm that is perceived to be caused should be outweighed by the perceived gains.

“One can argue that, for the intelligence collection to be just, the level of harm that one perceives to be caused, or prevented, by the collection should be outweighed by the perceived gains.”

- Last resort [=subsidiarity]: less harmful acts should be attempted before more harmful ones are chosen.

“In order for an intelligence collection means to be just, it must only be used once other less or none harmful means have been exhausted or are redundant.”

- Discrimination: There should be discrimination between legitimate and illegitimate targets.

“The principle of discrimination for the just intelligence principles therefore distinguishes between those individuals without involvement in a threat (and thereby protected), and those who have made themselves a part of the threat (and by so doing have become legitimate targets). According to the degree to which an individual has assimilated himself, either through making himself a threat or acting in a manner that forfeits his rights, the level of harm which can be used against him will alter.”

In the proposed Dutch interception framework, the collection phase and preprocessing phase require purpose-orientation, necessity, proportionality and subsidiarity, but the interception is (in some cases likely necessarily) authorized without specifying persons, organizations or technical characteristics. One wonders how the Dutch intelligence lawyers, the Minister and the CTIVD would reflect on experiments such as GCHQ’s OPTIC NERVE, in which GCHQ collected webcam images from 1.8 million Yahoo webcam users during a six-month run. It is a case of Sigint search applied to cyberspace. OPTIC NERVE certainly violates Bellaby’s principle of discrimination, and is at odds with the principle of proportion; but Bellaby’s other principles could still be satisfied. Would it be lawful, under the to-be-proposed legislation, for Dutch intelligence to carry out a program like OPTIC NERVE? In the eyes of the intelligence service and the Minister? In the eyes of the CTIVD? Can we anticipate (other?) potential gaps between law and ethics? How about opportunistically, indiscriminately collecting and preprocessing IKE and RSA key exchanges on a large scale, just in case it might be useful in the future for the authorized (broad?) purposes?

One particularly interesting category might be non-specific domestic interception: whereas the Wiv2002 limits non-specific interception to communications that have at least either a foreign source or a foreign destination, it is implausible that the Dutch government will uphold that limitation in the internet age. If eliminated, a strict legal barrier to non-specific domestic interception disappears. And considering the nature of, e.g., jihad-related activity — “swarm dynamics” as the General Intelligence & Security Service (AIVD) put it — certain forms of domestic surveillance can be expected. We’ll find out when the government submits the bill to the House of Representatives, which is any day now.
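The current restriction amounts to a simple predicate over the two endpoints of a communication. A minimal sketch, assuming (unrealistically) that each endpoint can be reliably mapped to a country code; the function name and the `home` parameter are hypothetical:

```python
def has_foreign_endpoint(source_country: str, destination_country: str,
                         home: str = "NL") -> bool:
    """True if at least one endpoint lies outside `home`.

    Mirrors the limitation described in the text: non-specific interception
    is only permitted for communications with a foreign source or a foreign
    destination. Country codes are an illustrative assumption.
    """
    return source_country != home or destination_country != home


print(has_foreign_endpoint("NL", "DE"))  # True: foreign destination
print(has_foreign_endpoint("NL", "NL"))  # False: purely domestic
```

If the bill drops this condition, the check effectively always succeeds, and purely domestic traffic falls within scope.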

Further groups to keep in mind are lawyers and journalists, whose metadata and contents may be searched and/or collected as part of activities in the collection and preprocessing phases. The different authorization levels and the separation of jobs and duties are nice, but not foolproof.

Note that the hacking power (Article 24) is separate from the interception framework. There are no CTIVD oversight reports that substantially review uses of Article 24, but from oversight report 39 (.pdf, 2014; about the AIVD’s activities concerning social media, in the period 2011-2014) it is clear that the CTIVD interprets the hacking power as a specific power (as opposed to non-specific). It is not clear how the CTIVD would distinguish between placing spyware in a smartphone, chaining a series of hacks against non-targets to obtain access to a target, and placing spyware inside shared infrastructure (ISPs, telcos, data centers, CDNs, etc.) to enable (bulk?) interception. Think of GCHQ’s plans concerning Belgacom, and programs such as NSA’s QUANTUM INSERT (MitM attacks) and TURBINE (large-scale malware implants). And concerning PRISM it is reported that “some XKeyscore assets are actually compromised third-party services that are queried in place and the results exfiltrated”. (I’m not saying this is unacceptable by definition; I’m saying that a more comprehensive legal framework may be necessary to appropriately regulate the use of hacking powers for such purposes.) And then there are reconnaissance activities. Port-scanning public IP addresses is hardly infringing (data such as collected in HACIENDA can nowadays be found in the open at Shodan, Scans.io, etc.), but using spyware to gain access to software and hardware configurations (servers? routers? PLCs?), or to pivot access to internal infrastructure, surely is infringing. Is Article 24, and thus its safeguards, triggered in all circumstances in which it should be? [UPDATE 2015-07-02: the answer is probably ‘yes’. The draft bill has been published, and it contains a separate paragraph on reconnaissance. From the bill’s explanatory memorandum it is clear that the permission to perform reconnaissance does not itself cover permission to hack, and that hacking will require prior approval from the Minister.]

One might argue that Dutch intelligence will never plan to carry out programs like PRISM, QUANTUM INSERT or TURBINE (and what have you) because Dutch intelligence is not like GCHQ and NSA, at least not historically (.pdf), in terms of privacy laws, human rights concerns and legal standards; and of course because of the smaller Dutch budget. But acquiring access to (possibly-)relevant communications, preferably in cleartext, is one of the core tasks of the JSCU; the Netherlands has relations with NSA (example) and GCHQ (example); and indeed, malware implants and access to shared infrastructure may prove necessary to circumvent cryptography, assuming that the world won’t decide to ban strong cryptography, or to hand over cryptographic keys voluntarily or by legal coercion. How will necessity, proportionality and subsidiarity of CNE and CNA be weighed by the intelligence service’s lawyers, the Minister, and the CTIVD? From CTIVD oversight report 39 it is known that some unlawful conduct took place in the AIVD’s acquisition of web fora: in four cases, the CTIVD found that the AIVD acquired the data of a web forum with large portions of non-target members, and concluded it was disproportionate and thus unlawful. The assessment and monitoring of hacking activities is a point of attention; we’ll hopefully learn more about it during upcoming debates and from future oversight reports.

Citing from page 58 of Aidan Wills’ report Democratic and effective oversight of national security services (.pdf, June 2015), prepared for the Council of Europe:

(…) security service managers and their staff play the leading role in ensuring that their activities are lawful and comply with human rights. It is individual members of security services, not external overseers, who are present when many decisions with important human rights implications are made. For this reason, the values, ethics and legal knowledge of security service personnel is of utmost importance. With this in mind, security service managers have to implement robust selection vetting criteria to ensure that they only recruit people with appropriate values. They also need to ensure that ongoing training is provided, including on human rights issues (Venice Commission 2007: § 132) and on the role played by external oversight bodies. It is essential that external oversight bodies scrutinise these internal policies and practices of security services.

Let’s recall a statement from CTIVD oversight report 28 (.pdf, 2011):

The Committee found that not all persons dealing with the processing of Sigint on a daily basis, appreciate the infringement [on the protection of personal life] made by this means.

This statement does not imply that the person or persons referred to violated any rule, nor that this attitude exists throughout a larger part of the workplace, i.e. that it would be culture (although group-think might exist some of the time, as it might anywhere). In fact, the CTIVD reports show that on non-Sigint issues, the Dutch intelligence services typically use their legal powers in a heedful and lawful way, including the specific interception power that affects specific persons, organizations or technical characteristics. Still, it is worth noting that the CTIVD included that statement in its oversight report. One can’t prevent every possible insider threat (LOVEINT etc.): intelligence personnel are humans too. Also, desensitization to privacy infringement, or not really being sensitized to begin with, seems plausible for those employed in intelligence (but quod gratis asseritur, gratis negatur); in the end it depends on individual characters and MICE.