Similar to previous oversight reports concerning Article 25 Wiv2002 (targeted interception), the new oversight report confirms that the AIVD generally uses Article 25 Wiv2002 carefully and heedfully (in a legal sense, as evaluated within the framework of Dutch law). In individual cases, the CTIVD identifies issues that it considers careless or illegal, for instance cases where privileged persons (lawyers, doctors) are tapped.

Similar to previous oversight reports concerning Article 27 Wiv2002 (sigint selection by keywords, by identity of person or organization, and/or by telecommunication characteristics), the new oversight report confirms that the AIVD often acts carelessly (in the legal sense), and that the legally required motivation for the use of the power (necessity, proportionality, subsidiarity) is often insufficient. Strangely, up to 2013, the CTIVD systematically refrained from judging such practices to be illegal, even though the practice obviously did not comply with the law: proper motivation is a legal requirement, and that requirement has largely not been met for years.

As of January 1st 2014, two out of the three committee positions within the CTIVD are held by new persons. Former Chairman of Attorneys General Harm Brouwer replaced Bert van Delden as chair, and former Rotterdam police chief Aad Meijboom replaced Eppo van Hoorn, who resigned in Q3/2013. The third person is former public prosecutor Liesbeth Horstink-Von Meyenfeld: she joined in 2009, and will be legally required to resign or be reappointed in 2015. (After an insanely complex selection process, a position is filled by Royal Decree for a six-year period, and members can be reappointed once.) As shown below, the “new” CTIVD has turned out to be willing to conclude that lack of legally required motivation constitutes illegality. This seemingly changed standpoint solves one part of the oversight problem. The next question is: does the practice change in reality? This remains to be seen; the CTIVD itself has no formal means to intervene in AIVD activities. This is left up to the Minister, who typically defends the AIVD and who probably doesn’t spend a lot of time critically assessing requests for permission to intercept, and to the Parliament, which historically showed scarce interest in intelligence; this has only slightly changed since Snowden.

Interestingly, the CTIVD decided to disclose statistics concerning the use of Article 25 Wiv2002 and Article 27 Wiv2002 in the report, and the Minister of the Interior chose to censor the statistics in the final publication. This is basically an affront to the CTIVD. Dutch readers should read this and this. I suggest that the CTIVD in response initiates an investigation into the use of Article 27 Wiv2002 to select by keywords. No oversight report is yet available that addresses this at length. The Minister of the Interior annually approves a list of topics for sigint selection by keywords; the keywords are then chosen by the AIVD itself. One always wonders about the dynamics of keyword-based surveillance. For instance, whether the keywords are limited to, say, export-controlled chemicals, or whether the AIVD also selects using general keywords (“bomb”); and also, what the thresholds and conditions are for someone (e.g. an activist) to become a person of interest to the AIVD’s a-task (National Security) or d-task (Foreign Intelligence).

The remainder of this post is a translation of the part of the new oversight report that specifically addresses sigint selection ex Article 27 Wiv2002:

12 Usage of the power to select sigint

12.1 Introduction

A request for approval for the use of the power to select sigint consists of two parts. First, the motivation for using the power. This details the specific investigation within which the power is used, and needs to discuss aspects of necessity, proportionality and subsidiarity. Second, the motivation has an appendix that lists the telecommunication characteristics (hereafter: list of selectors). The list of selectors describes the telecommunication characteristics that will be used as selectors (for instance, name of person or organization, phone numbers, email addresses). In the list of selectors, a column is included with a (very) brief description of the reason the selector is included. This description can also be a reference to the AIVD’s internal information system. The list of selectors is sent to the Minister of the Interior for approval, together with a summary of the motivation. Similar to the use of the interception power [ex Article 25 Wiv2002], approval can be given for a period of up to three months. The CTIVD has examined both the motivation of the use of the power to select sigint, and the (justification for the) list of selectors.

12.2 Selection of sigint in previous oversight reports

In oversight report 19, the CTIVD found that the AIVD did not deal with sigint selection in a careful manner. Often it was not explained whom the numbers and other telecommunication characteristics belong to, or why this telecommunication needed to be selected. The CTIVD concluded that it had insufficient knowledge about the motivation of the selection, and thereby could not judge whether the power was used in a legal manner in accordance with Article 27 Wiv2002. The CTIVD urgently recommended that requests for the use, or for the extension of the use, of the sigint selection power should include a specific motivation. The Minister of the Interior responded by stating he agreed with the CTIVD, but also expressed worries about the practical feasibility of that recommendation. The Minister agreed to further consult with the CTIVD on this matter.

In oversight report 26, concerning the AIVD’s foreign intelligence task, the CTIVD found that in the application of Article 27 Wiv2002 for foreign intelligence purposes, in many cases it still was not specified whom a characteristic belonged to and why it is important to select the information that can be acquired through this specific characteristic. The CTIVD did notice, however, that once sigint operations ran for a longer period, the AIVD was better able to explain whom telecommunication characteristics belong to, and could better argue why the use of the power was justified against these persons. The CTIVD emphasized that the AIVD must seriously strive to better specify against which person or organization sigint is used.

In oversight report 28, concerning the use of sigint by the Military Intelligence & Security Service (MIVD), the CTIVD has further elaborated the legal framework for the entire procedure of the processing of sigint. In that report the CTIVD once again concluded, this time concerning the MIVD, that it could not judge whether the use of sigint was legal in accordance with Article 27 Wiv2002 because the CTIVD had insufficient knowledge about the motivation. In oversight report 38, the CTIVD repeated its earlier findings.

In oversight report 35, the CTIVD examined one specific operation that involved selection of sigint, and judged aspects of it to be illegal.

The current investigation constitutes the first time since oversight report 19 that the CTIVD has assessed the legality of the use of sigint selection in general concerning the AIVD.

12.3 Search for the purpose of selection in previous oversight reports

In certain cases the AIVD uses its sigint search power [ex Article 26 Wiv2002] prior to sigint selection [ex Article 27 Wiv2002]. The AIVD hereby aims to identify whether a relevant person of interest is present within the communication intercepted in bulk. In this case, the AIVD attempts to establish the identity of the person, and whether a relation exists to the field of investigation. This constitutes search for the purpose of selection. The use of sigint search supports better targeted sigint selection.

In oversight report 28, the CTIVD described the practice of sigint search by the MIVD, and in oversight report 38 the CTIVD repeated its conclusions. The CTIVD distinguishes three forms of search aimed at selection, that involve taking note of the contents of communication. In short, this involved the following forms:

- Searching bulk intercepts to determine whether Ministerially approved selectors can in fact generate the desired information;

- Searching bulk intercepts to identify or describe potential ‘targets’;

- Searching bulk intercepts for data from which, in the context of an anticipated new area of investigation, future selectors can be retrieved.

In oversight report 28, the CTIVD only found the first form of sigint search for the purpose of sigint selection to be legal, because only that form has safeguards for privacy infringement, that is, through the prior approval of the Minister to use sigint against the person or organization involved. This use of sigint search supports the sigint selection for which permission was obtained. This is necessary because Article 13 of the Dutch Constitution requires authorization by a competent body prior to infringements of phone secrecy and telegraph secrecy. The CTIVD finds the second and third forms of search to be illegal, because they have no legal ground, and privacy infringement is not safeguarded by the requirement of Ministerial permission prior to using sigint selection against a person or organization.

The CTIVD left the legislator to consider whether it is necessary that the MIVD (and AIVD) be granted the power to search for the purpose of selection, taking into account the right to privacy. In his response, the Minister of Defense stated that he would cooperate with the Minister of the Interior to establish a future-proof legal framework. During the General Meeting that addressed, among other things, oversight report 28, the Minister of Defense stated that he agrees with the CTIVD’s conclusion concerning the sigint search power, but that while waiting for the intended change of law, the current practice, for reasons of national security, will continue. In their response to oversight report 38, both Ministers stated that the practice that was found to be illegal will be taken into account in an intended change of law. During a plenary meeting on eavesdropping by the NSA, that addressed oversight report 38, the Minister of Defense stated that the third form of sigint search has stopped, and repeated that the MIVD continues to carry out the second form of search while waiting for a change of law. In oversight report 38, the CTIVD announced that it will address sigint search by the AIVD in the ongoing investigation into the use of interception power [ex Article 25 Wiv2002] and the power to select sigint [ex Article 27 Wiv2002] by the AIVD.

12.4 Methods of AIVD concerning search for the purpose of selection

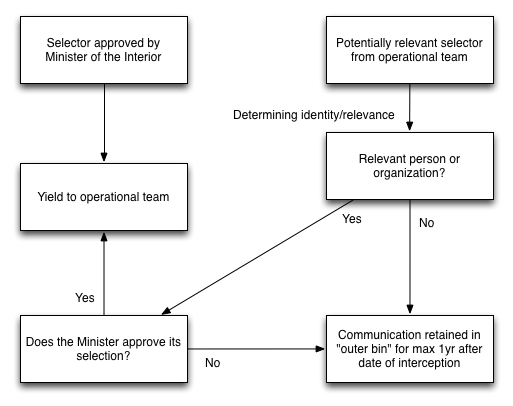

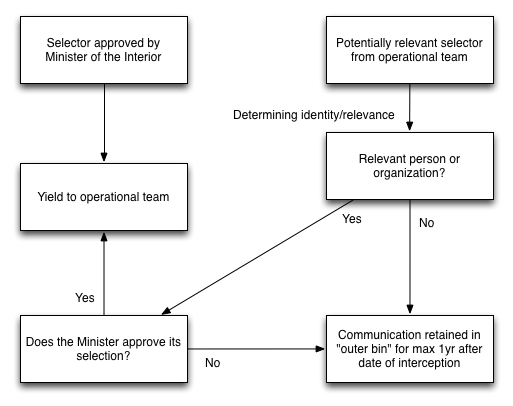

The CTIVD has taken notice of an internal method of the AIVD concerning the use of sigint search and sigint selection powers. This method is set out in writing and approved by management. The method provides for the possibility that an operational team examines whether a telecommunication characteristic, such as a phone number or email address, that no Ministerial permission is available for yet, is relevant to the investigation. The method is aimed as establishing the identity of the communicating party associated with this telecommunication characteristic. The CTIVD noticed that the AIVD interprets the identification of the communicating party in a broader sense than only establishing the name of the involved party. The AIVD also assesses whether the party is relevant for the investigation carried out by the operational team. One can think of establishing that a person has a certain function, relevant to the investigation, within an organization.

The department that facilitaties bulk interception, can at the request of, and in cooperation with, the operational team carry out a metadata analysis to determine how the telecommunication characteristics relates to other persons and organizations that are included in the investigation (who/what has contact with who, how long, how often, from what location, etc.). To establish the identity of the communication parties and determine their relevancy for the AIVD’s investigation, it can be necessary to also take note of the contents of communication. The possibility is then offered to examine the nature of the communication that has already been intercepted. This can involve stored bulk intercepts for which no Ministerial permission has yet been obtained to select from. It can also involve communication that has been previously selected from the bulk, and is thus already accessible by the team; or information that is in the possession of the AIVD through other (special) powers, such as hacking or acquisition of a database.

The team’s data processor is given the opportunity to briefly see (or hear) the contents of the communication that can be related to the telecommunication characteristic to determine whether the telecommunication characteristic is relevant. This allows the team to determine whether it is useful to obtain permission and include the telecommunication characteristic on the list of selectors so that the communications can be fully known to the team. The CTIVD understood that not all teams use this method equally.

According to the AIVD’s internal policy, it is not intended that the processor exploits the information obtained without obtaining permission from the Minister.

The CTIVD depicts this method as follows.

12.5 Assessment of the methods

The CTIVD considers the question whether the AIVD’s method is legal.

Insofar as the method is used for telecommunication characteristics for which Ministerial permission was obtained, the CTIVD finds the method to be legal, because the privacy infringement is safeguarded by the Minister’s permission. The CTIVD also considers this method legal concerning the MIVD.

The CTIVD finds that the AIVD’s method, insofar as it concerns telecommunication characteristics that the Minister has not yet approved, is equivalent to the second form of sigint search described in section 12.3. The CTIVD thus concludes that this method is illegal.

This conclusion will be upheld as long as the anticipated legislation does not yet exist.

The CTIVD notes that the Minister of Defense, when announcing an intended change of law in consultation with Parliament, stated that the MIVD’s practice will continue. Although the Minister of Defense only mentioned the MIVD, the CTIVD notes that the AIVD, which applies the same method, also continues this practice while awaiting a change of law. Now that this is the current practice, the CTIVD considers it important to evaluate the compatibility between the practice and the right to privacy.

The CTIVD notes that the AIVD uses this method for the purpose of carefully establishing the list of selectors and avoiding unnecessary requests for permission. The CTIVD recognizes that the described method can support this. Moreover, the CTIVD can conceive of the possibility that this also supports implementation of the previous recommendation to improve the motivation of sigint selection. The CTIVD also expects that this could result in fewer infringements on the right to privacy, because the pre-investigation allows a more targeted use of sigint.

The right to privacy requires that the following safeguards are present:

- The only purpose of briefly perusing the contents of communication can be the determination of the identity of communicating parties and the relevance of the communication to the ongoing investigation. Other use is not permitted until permission has been obtained from the Minister. A requirement for briefly perusing the contents of communication is that an adequate separation of duties exists between the department that facilitates bulk interception and the operational team, in the sense that the operational team does not itself obtain access to the communication.

- To ensure this separation of duties, it is important to provide an adequate written registration and reporting of having perused the communication. It must be recorded what communication has been seen/heard, and what the outcome was. The CTIVD considers this registration and reporting to be important for internal accountability and external control, as well as for carefulness.

The CTIVD notes that the second safeguard is currently insufficiently implemented by the AIVD. The CTIVD notes that insufficient reporting is performed on what communication has been seen/heard and what the outcome was.

12.6 Usage of the sigint selection power

The CTIVD has examined the AIVD operations that between September 2012 and August 2013 involved the use of sigint selection. Concerning the examined operations, the CTIVD has several remarks. Insofar as necessary, this is elaborated on in the classified appendix.

The CTIVD finds that sigint selection is used in varying ways by the various operational teams of the AIVD. Specifically, a difference is seen between the teams of the National Security unit and the teams of the Foreign Intelligence unit. The use of this power by Foreign Intelligence teams is generally broader, as can be observed in the size of the list of selectors. The operations of the National Security teams are largely more targeted. Considering that the legal definition of the a-task [National Security] is focussed on individual persons and organizations, and the d-task [Foreign Intelligence] provides for carrying out investigations on countries, this is not surprising. The CTIVD finds that the lists of selectors vary in size from several selectors to thousands of selectors.

The CTIVD notes that for each person or organization that emerges during the investigation, it must be motivated why sigint selection is necessary. It must be stated what the purpose of the sigint selection is within the context of the investigation, and what the grounds are for the expectation that the yields of selection will contribute to that purpose. There hence must be a link between the broader investigation that is carried out and the necessity of the selection of communication from the specific person or organization. This is different for every person or organization.

In a request for extension, the yields of the selection and the added value to the investigation must be considered, not in general terms but specific to the person or organization. General statements that the use of the special power contributed to the intelligence need, or resulted in (unspecified) reports, or confirmed current beliefs, are insufficient. In addition to necessity, a request for permission must state in what way the requirements of proportionality and subsidiarity are met. The CTIVD notes that absence of yields can be a result of the nature of sigint. Communication can possibly not be intercepted because of the range [sic] of the satellite dishes. The CTIVD is of the opinion that it is permissible to uphold [selection by] telecommunication characteristics as long as it is periodically reconsidered whether upholding the characteristics still complies with the legal requirements for the use of the power, and this consideration is written down. Insofar as the selection of certain telecommunication characteristics yielded communication but this communication turned out to be irrelevant, the AIVD must remove the characteristics from the list of selectors that permission is requested for.

The CTIVD finds that the extent to which the motivation for use of the power to select sigint establishes a framework from which it can be foreseen which persons or organizations are within the scope of the operation varies from operation to operation. In certain operations it is made clear whom the AIVD is interested in, and the motivation clearly states which persons and organizations are within the scope of the operation. The CTIVD notes that it is important that the motivation provides sufficient clarity about which persons and organizations can be selected, under which conditions, and why. A direct and clear link must exist between the motivation and the persons and organizations that are included in the list of selectors. The CTIVD finds that this link is absent in three operations, or made insufficiently clear by the AIVD. The CTIVD finds this to be illegal. Moreover, the CTIVD finds that in one operation, non-limitative enumerations are used in the motivation. An example of this is the phrase “persons and institutions such as [.], et cetera”. The CTIVD finds this to be illegal.

The CTIVD finds that in two operations, persons or organizations with a special status (e.g. non-targets, sources, occupations that have professional secrecy) were included on the list of selectors, without specific attention being paid to this in the motivation. The CTIVD notes that special categories of persons or organizations must be explicitly mentioned in the motivation if they are included on the list of selectors, and that attention must be paid to the legal requirements of necessity, proportionality and subsidiarity in relation to these telecommunication characteristics. The CTIVD notes that it is not, or insufficiently, apparent what considerations were made concerning these requirements. Considering the special status of these persons or organizations, the CTIVD finds this to be illegal. A number of telecommunication characteristics are related to a person with whom the AIVD cooperates, and whose interception [ex Article 25 Wiv2002] by the AIVD is found to be illegal by the CTIVD. The CTIVD also finds the sigint selection against this person to be illegal.

The CTIVD finds that in the motivation on which the Minister based his approval, in two cases no attention was paid to the requirements of necessity, proportionality and subsidiarity. In the internal motivation, the AIVD does pay sufficient attention to this. The CTIVD finds this to be careless. Although the AIVD is not required to provide the Minister an exhaustive motivation concerning necessity, proportionality and subsidiarity, the CTIVD notes that the permission request must provide sufficient clarity about the considerations to allow the Minister to assess the request.

The CTIVD finds that the AIVD in multiple cases incorrectly explains the requirements of proportionality and subsidiarity. Deliberating on subsidiarity, for instance, in one operation the AIVD stated that sigint selection “could possibly yield change for exchange with other foreign agencies”. In a different operation, it was stated that sigint selection allows the AIVD to “carry out investigations in a relatively simple and efficient manner, involving limited risks”. The CTIVD notes that this does not constitute a correct deliberation in terms of the requirements of proportionality and subsidiarity. Correct deliberation on proportionality implies, after all, a weighing of interests that explicitly involves the interests of the target. This also applies to the requirement of subsidiarity, on the basis of which the AIVD is required to use the means that are least infringing on rights. The CTIVD finds that the outcome of this deliberation can result in the AIVD having to use an inefficient and relatively complex means. The CTIVD did not find evidence that indicates that the operations involved do not meet these requirements. The CTIVD thereby finds that the motivation by the AIVD is lacking and thus careless, but not illegal.

The way in which the list of selectors is structured varies from team to team. The list of selectors includes a (very) brief motivation of why the characteristic is included. The CTIVD finds that the motivation in various operations is done in very different ways. The CTIVD observed cases in which the list of selectors refers to an internal document of the AIVD that explains the relevance of the characteristic to the operation. In addition, the CTIVD observed cases in which a short explanation of the relevance is included. In one operation, the motivation does not include more than a brief indication of the function of the person, or other indications of the characteristic. This indication can comprise a single word (e.g. biologist, toxicologist, phone number, fax). The CTIVD finds this method of motivation, which only includes a single word and no further explanation, to be illegal. The CTIVD notes that the latter cases are insufficiently traceable to the motivation of the request for permission, and that the framework must be included in that. The CTIVD notes that concerning every characteristic, at least the relevance of the characteristic must be (briefly) included in the list of selectors, and where necessary, a clear reference must be included (to an internal document) on the basis of which the relevance of the characteristic can be further assessed.

The CTIVD finds that in a certain list of selectors, multiple telecommunication characteristics have been included with the remark that these “probably” belong to a person that the Minister has approved sigint selection for. The CTIVD notes that the use of the first form of sigint search, as described in section 12.3, could help limit the characteristics included in the list of selectors to characteristics that the AIVD has determined could actually be related to the person of interest.

The CTIVD finds that the list of selectors in certain operations has substantially increased over time. In one operation, the CTIVD observed telecommunication characteristics (such as phone numbers or email addresses) that were obtained through legal use of a different special power. The CTIVD finds that all these characteristics were used for sigint selection by the AIVD. In nearly all cases, it was not indicated whom the number belongs to or what the specific relevance is. In fact the only link to the AIVD investigation was the circumstance that the persons associated with the characteristics have contacted a person of interest to the AIVD, without any indication of the nature of the contact or other relevant clues. The CTIVD notes that the mere contact with a person of interest, without the relevance of this contact to the AIVD’s investigation, is insufficient justification for including it in the list of selectors. The CTIVD considers it to be likely that it is possible to use less infringing means to determine what contacts are evidently irrelevant. The CTIVD thus finds the selection based on these telecommunication characteristics to be illegal.